The Art of A/B Testing in Marketplace Companies

A/B testing, also known as split testing, is a critical process for marketplace companies that want to make data-driven decisions. It's the backbone of agile marketing and product development. But not all A/B tests are created equal. Here's how to make sure your A/B tests cut through the noise and deliver actionable results.

Define Clear Objectives

Before you begin, know what you're testing for. Is it an increase in sales, higher click-through rates, or improved customer retention? Your goal should be Specific, Measurable, Achievable, Relevant, and Time-bound (SMART).
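One way to make "Measurable" and "Time-bound" concrete is to turn the goal into a required sample size and a test duration before launch. The sketch below is a minimal illustration, assuming a conversion-rate metric analyzed with a two-sided two-proportion test; it uses the standard normal-approximation sample-size formula. `TestObjective` and its fields are hypothetical names, and the traffic figure in the example is made up.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class TestObjective:
    """Hypothetical SMART-style objective: one metric, a baseline, a target, a traffic rate."""
    metric: str
    baseline_rate: float   # current conversion rate, e.g. 0.10
    target_rate: float     # rate the variant should reach to count as a win, e.g. 0.12
    daily_visitors: int    # users entering the test per day, across all variants
    alpha: float = 0.05    # two-sided significance level
    power: float = 0.80    # probability of detecting the target effect if it is real

    def sample_size_per_variant(self) -> int:
        """Normal-approximation sample size for comparing two proportions."""
        z_alpha = NormalDist().inv_cdf(1 - self.alpha / 2)
        z_beta = NormalDist().inv_cdf(self.power)
        p1, p2 = self.baseline_rate, self.target_rate
        p_bar = (p1 + p2) / 2
        numerator = (
            z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
        ) ** 2
        return math.ceil(numerator / (p2 - p1) ** 2)

    def days_needed(self, n_variants: int = 2) -> int:
        """Days of traffic required to fill every variant."""
        total = self.sample_size_per_variant() * n_variants
        return math.ceil(total / self.daily_visitors)


goal = TestObjective("checkout_conversion", 0.10, 0.12, daily_visitors=1000)
print(goal.sample_size_per_variant(), goal.days_needed())  # 3841 8
```

If the required duration is longer than you can afford, that is a signal to revisit the target (a larger minimum effect) or the metric before the test starts, rather than stopping early.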

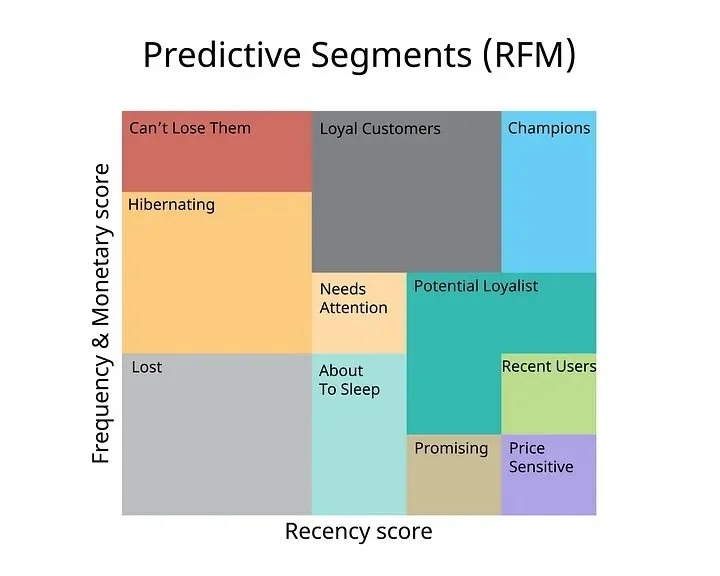

Segment Your Audience

Marketplaces have a diverse audience. Segment your users to ensure you're delivering relevant experiences and getting meaningful data. For example, new users might react differently to a change compared to returning users.

Source: https://rittmananalytics.com/blog/2021/6/20/rfm-analysis-and-customer-segmentation-using-looker-dbt-and-google-bigquery
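To compare how segments respond, compute the conversion rate separately for every (segment, variant) cell instead of one pooled rate per variant. The sketch below is a minimal, standard-library illustration; the `(segment, variant, converted)` tuple layout and the `segment_user` rule (zero prior orders means "new") are hypothetical choices, not a prescribed schema.

```python
from collections import defaultdict


def segment_user(prior_orders: int) -> str:
    """Hypothetical rule: a user with no prior orders counts as new."""
    return "new" if prior_orders == 0 else "returning"


def conversion_by_segment(events):
    """events: iterable of (segment, variant, converted) tuples.

    Returns {(segment, variant): conversion_rate}.
    """
    totals = defaultdict(lambda: [0, 0])  # (segment, variant) -> [users, conversions]
    for segment, variant, converted in events:
        cell = totals[(segment, variant)]
        cell[0] += 1
        cell[1] += int(converted)
    return {key: conv / users for key, (users, conv) in totals.items()}


events = [
    (segment_user(0), "A", True), (segment_user(0), "A", False),
    (segment_user(0), "B", True), (segment_user(0), "B", True),
    (segment_user(3), "A", False), (segment_user(5), "B", False),
]
print(conversion_by_segment(events))
```

Keep in mind that each segment has fewer users than the whole test, so per-segment differences need their own significance check before you act on them.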

Keep It Simple

Limit your A/B tests to one variable at a time. This way, you can be sure that any changes in user behavior are due to the alteration you've made, and not some other confounding factor.
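If your variants are driven by configuration, a cheap pre-launch check is to diff the control and treatment configs and confirm that exactly one setting changed. This is a minimal sketch assuming variants are described as flat dictionaries; the keys shown (`cta_text`, `cta_color`, `layout`) are hypothetical.

```python
def changed_fields(control: dict, treatment: dict) -> set:
    """Return the config keys whose values differ between control and treatment."""
    keys = control.keys() | treatment.keys()
    return {k for k in keys if control.get(k) != treatment.get(k)}


def assert_single_change(control: dict, treatment: dict) -> str:
    """Raise if the variants differ in anything other than one field; return that field."""
    diff = changed_fields(control, treatment)
    if len(diff) != 1:
        raise ValueError(f"Expected exactly one changed field, found {sorted(diff)}")
    return diff.pop()


control = {"cta_text": "Buy now", "cta_color": "blue", "layout": "grid"}
treatment = {**control, "cta_color": "green"}
print(assert_single_change(control, treatment))  # cta_color
```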

Use the Right Tools

Use experimentation platforms such as Optimizely. (Google Optimize, once a popular free option, was discontinued by Google in September 2023.) These platforms can help you design tests, segment audiences, and analyze results.

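```python
# Python pseudo-code for setting up an A/B test.
# `pyAB` and `SplitTest` are illustrative stand-ins for your testing tool's SDK.
from pyAB import SplitTest

# Define the A/B split
test = SplitTest('new_feature_test', variants=['A', 'B'])

# Assign the user to a variant
user_id = 'user_id_here'
user_variant = test.assign_variant(user_id)

# Log the outcome for this user
converted = True  # placeholder: did the user complete the target action?
if converted:
    test.log_conversion(user_id)
else:
    test.log_failure(user_id)
```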
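Under the hood, many tools assign variants by hashing a stable user ID, so a returning user always sees the same variant without any stored lookup table. The following is a self-contained sketch of that idea, not any particular platform's implementation; `assign_variant` and `ConversionLog` are hypothetical names, and the in-memory log stands in for whatever event store you actually use.

```python
import hashlib
from collections import defaultdict


def assign_variant(experiment: str, user_id: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant by hashing experiment name + user id."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


class ConversionLog:
    """In-memory tally of exposures and conversions per variant."""

    def __init__(self):
        self.exposures = defaultdict(int)
        self.conversions = defaultdict(int)

    def record(self, variant: str, converted: bool) -> None:
        self.exposures[variant] += 1
        if converted:
            self.conversions[variant] += 1

    def rate(self, variant: str) -> float:
        n = self.exposures[variant]
        return self.conversions[variant] / n if n else 0.0


log = ConversionLog()
variant = assign_variant("new_feature_test", "user_123")
log.record(variant, converted=True)
```

Including the experiment name in the hash keeps a user's bucket stable within one test while reshuffling users independently across different tests.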

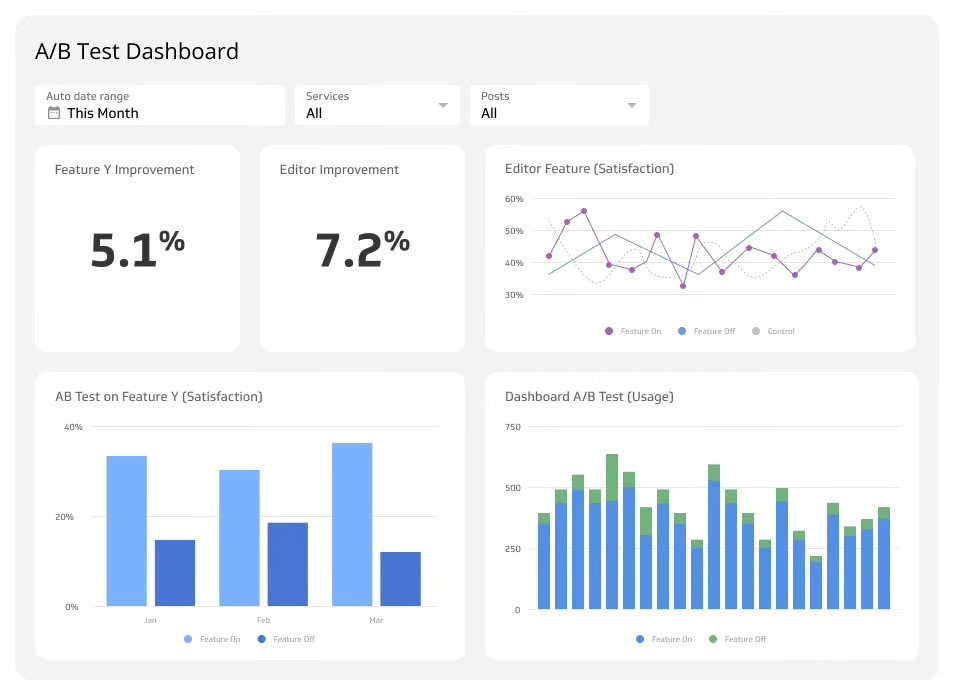

Monitor Tests in Real Time

Keep an eye on your tests while they run. If something goes wrong, such as a broken variant or a traffic split that drifts from what you configured, you'll want to know immediately, not after you've made your decisions.
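A common automated health check is a sample ratio mismatch (SRM) test: if you configured a 50/50 split but the observed user counts deviate more than chance allows, assignment or logging is probably broken and the results should not be trusted. The sketch below runs a chi-square goodness-of-fit test for two variants using only the standard library; the strict 0.001 alert threshold is an assumption you should tune. For more than two variants, `scipy.stats.chisquare` performs the same test.

```python
import math


def srm_check(n_a: int, n_b: int, expected_share_a: float = 0.5, alpha: float = 0.001):
    """Chi-square test for sample ratio mismatch between two variants.

    Returns (p_value, mismatch_detected).
    """
    total = n_a + n_b
    expected = (total * expected_share_a, total * (1 - expected_share_a))
    observed = (n_a, n_b)
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With two groups the statistic has 1 degree of freedom, i.e. it is a squared
    # standard normal, so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return p_value, p_value < alpha


print(srm_check(5000, 5000))  # (1.0, False): counts match the configured split
print(srm_check(5000, 4500)[1])  # True: a 500-user gap is very unlikely by chance
```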

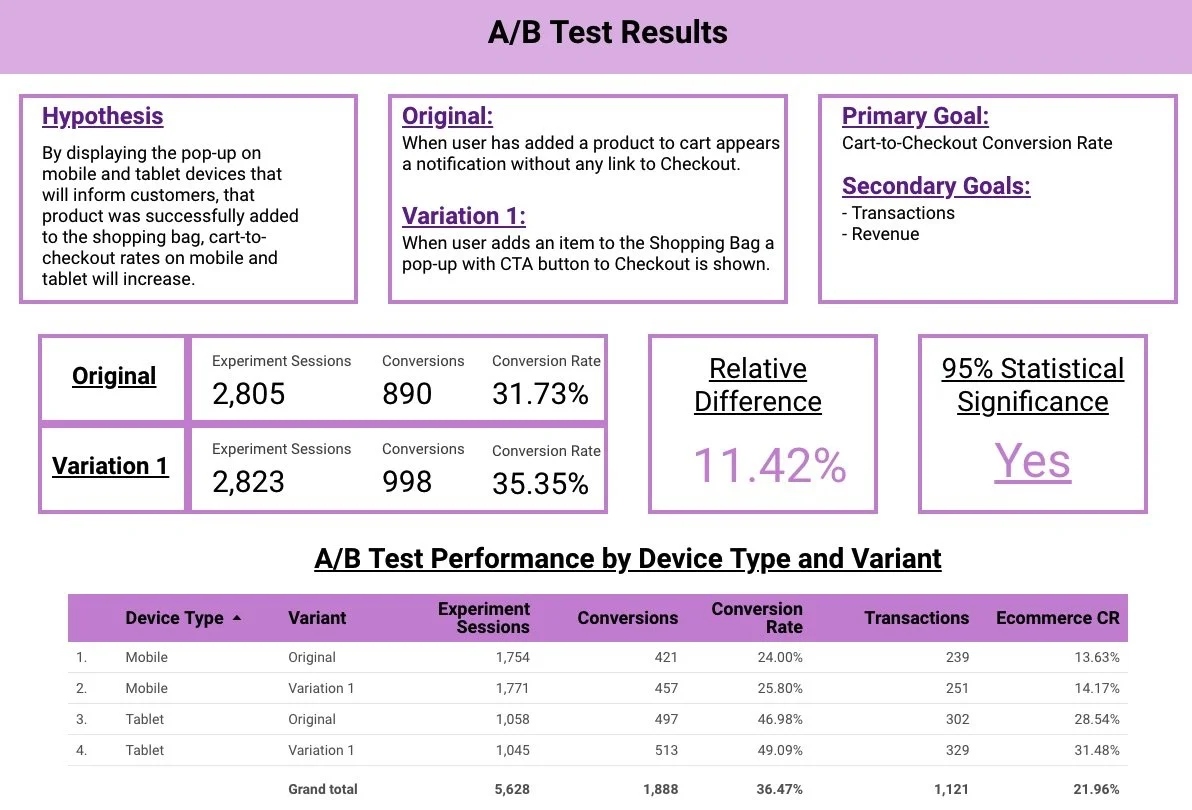

Analyze the Data

Once your test is complete, it's time to crunch the numbers. Use a test of statistical significance to judge whether the difference between variants is likely to be real rather than random noise.

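```python
# Python code for calculating statistical significance (requires numpy and statsmodels).
# Note: proportions_ztest lives in statsmodels, not scipy.stats.
import numpy as np
from statsmodels.stats.proportion import proportions_ztest

# Sample conversion rates and sample sizes for variants A and B
conversion_rates = np.array([0.15, 0.20])
nobs = np.array([1000, 1000])

# Convert rates to conversion counts (element-wise), then perform the two-sided z-test
counts = np.round(conversion_rates * nobs).astype(int)
z_stat, p_val = proportions_ztest(count=counts, nobs=nobs)
```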
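To see what the z-test computes, and to report how large the difference is rather than only whether it is significant, here is a standard-library sketch of the two-sided two-proportion z-test plus a normal-approximation confidence interval for the difference. It follows a common convention (pooled standard error for the test, unpooled for the interval); `two_proportion_test` is a hypothetical helper name. The difference is taken as B minus A, so a positive z means B converted better.

```python
import math
from statistics import NormalDist


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05):
    """Return (z, two-sided p-value, confidence interval for rate_B - rate_A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Under the null hypothesis both variants share one rate, so pool the data
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Unpooled standard error for the interval around the observed difference
    se_diff = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return z, p_value, (diff - z_crit * se_diff, diff + z_crit * se_diff)


z, p, ci = two_proportion_test(150, 1000, 200, 1000)
# z ≈ 2.94, p ≈ 0.0033, 95% CI for B - A ≈ (0.017, 0.083)
```

The interval says B's lift over A is plausibly anywhere from about 1.7 to 8.3 percentage points, which is often more useful to stakeholders than the p-value alone.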

Communicate Results

Share your findings with all stakeholders. Even a test that fails to beat the control is a valuable learning opportunity.

Source: https://scandiweb.com/blog/live-mode-dashboard-for-a-b-test-results-with-data-studio/

By following these best practices, marketplace companies can refine their user experience, improve engagement, and increase conversions. Remember, A/B testing is a marathon, not a sprint. The more you test, the more you learn, and the better your marketplace will perform.